The Italian Data Protection Authority’s €15 million fine against OpenAI in 2024 was a watershed moment: Europe’s first major GDPR penalty targeting generative AI, and a signal that data protection regulators now treat AI systems as priority enforcement targets.

OpenAI faced this penalty because it couldn’t establish valid legal grounds for processing personal data during ChatGPT’s training. The case demonstrates how AI’s growing sophistication creates increasingly complex regulatory challenges under GDPR requirements.

By 2025, total GDPR penalties had reached roughly €5.88 billion across all sectors. Organizations must now treat compliance as essential to business strategy. Regulators have developed deeper expertise in algorithmic processes and data handling practices, making AI systems prime candidates for enforcement action.

It’s no surprise that this enforcement climate makes universities wary of deploying AI on sensitive student data. Educational institutions handle vast amounts of personal information, including academic records, behavioral data, and often sensitive details about minors. They find themselves caught between the promise of AI-enhanced learning and the specter of regulatory penalties that could overwhelm their entire technology budgets.

This article covers:

- GDPR requirements that apply to AI systems

- Regulatory enforcement trends and patterns

- Actionable compliance strategies

These insights matter if you build AI systems, deploy third-party AI tools, or oversee data governance in AI projects. How well you navigate GDPR’s application to AI technology will determine whether you face regulatory penalties or preserve customer confidence.

Understanding the Legal Foundations

What is GDPR?

The General Data Protection Regulation became the toughest data protection law in the world when it launched in 2018. It completely changed how companies handle people’s personal information – not just in Europe, but everywhere. The regulation brought in new ideas like building privacy protections from the start, giving people real control over their data, and making companies prove they’re following the rules.

GDPR doesn’t care where your company is based. If you handle personal data from anyone living in the EU, these rules apply to you. The law defines personal data as anything that could identify someone, either directly or through connecting different pieces of information together. This gets tricky with AI systems because they can figure out who someone is even from data that looks completely anonymous.

For AI companies, this creates a puzzle. Modern AI systems are incredibly good at finding patterns and making connections across different data sources. Something that seems harmless – like browsing habits or purchasing patterns – can suddenly become a way to identify specific people when an AI system analyzes it. This means way more data falls under GDPR rules than most companies initially realize.
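To make the re-identification risk concrete, here is a minimal Python sketch. It assumes a hypothetical dataset with no names or emails, only three quasi-identifiers (`postcode`, `birth_year`, `gender`), and counts how many records are unique on that combination. The field names and sample values are illustrative assumptions, not data from any real system.

```python
from collections import Counter

# Hypothetical "anonymous" records: no names, only quasi-identifiers.
records = [
    {"postcode": "35121", "birth_year": 1990, "gender": "F"},
    {"postcode": "35121", "birth_year": 1990, "gender": "F"},
    {"postcode": "35121", "birth_year": 1985, "gender": "M"},
    {"postcode": "28001", "birth_year": 1972, "gender": "F"},
]

QUASI_IDENTIFIERS = ("postcode", "birth_year", "gender")


def unique_combinations(rows, keys=QUASI_IDENTIFIERS):
    """Return rows whose quasi-identifier combination appears exactly once."""
    counts = Counter(tuple(row[k] for k in keys) for row in rows)
    return [row for row in rows if counts[tuple(row[k] for k in keys)] == 1]


at_risk = unique_combinations(records)
print(f"{len(at_risk)} of {len(records)} records are unique on {QUASI_IDENTIFIERS}")
```

A record that is unique on these fields can potentially be linked to a named person through any other dataset that shares them, which is why such data may still count as personal data.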

What is Artificial Intelligence (AI) in a GDPR context?

Looking at GDPR regulations, AI systems function as data processing operations. They use algorithms to analyze information, make predictions, and make decisions using personal data. This definition covers a wide range of technologies, from basic recommendation systems to complex language models that handle large volumes of personal information during both training and active use.

These systems work with personal data at several stages of the AI lifecycle. During training, they learn patterns from datasets that contain personal information. During operation, they process personal data at inference time to make real-time predictions or decisions about individuals. Many systems also include adaptive learning mechanisms that keep refining their accuracy and capabilities as new data arrives.

The Intersection of GDPR and AI

Key compliance challenges

The convergence of GDPR and AI creates several unique compliance challenges that organizations struggle to navigate. Traditional privacy frameworks weren’t designed for systems that can learn, adapt, and make autonomous decisions about individuals.

One fundamental challenge involves establishing clear legal grounds for processing. A related one is moving data lawfully across borders. When Meta received the largest GDPR fine in history (€1.2 billion, in 2023) for transferring EU users’ data to the United States without adequate safeguards, it underscored how traditional compliance approaches often fall short when applied to systems that process personal data across multiple jurisdictions and use cases.

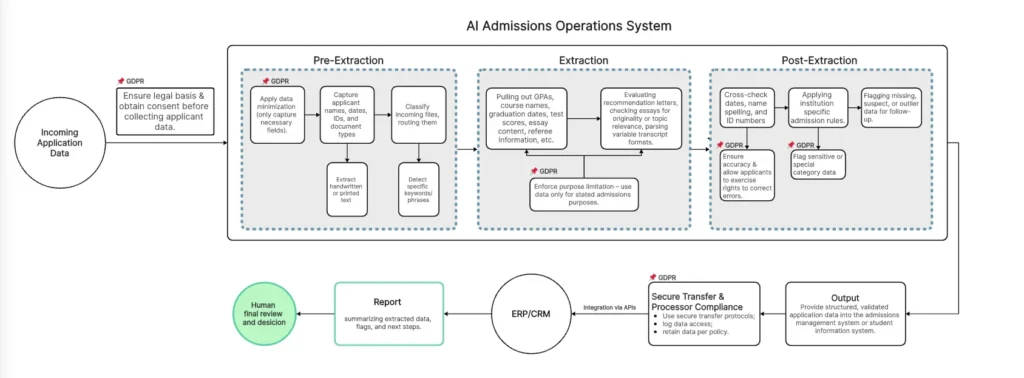

For universities and admissions teams, the same scrutiny applies to how applicant data is stored, shared across departments, and fed into AI copilots for admissions review.

GDPR principles applied to AI

When applied to AI systems, GDPR’s core principles take on new dimensions. Lawfulness, fairness, and transparency mean having clear legal grounds for processing personal data — and ensuring people understand how decisions that affect them are made. Regulators now extend fairness to include algorithmic equity, scrutinizing AI training datasets for bias.

Purpose limitation adds another layer: data collected for AI training or operations must be used only for specified, legitimate purposes. Many fines trace back to companies stretching personal data beyond its original scope — a common risk in AI, where models can generate unexpected insights.

Enforcement is already catching up. Italy’s data protection watchdog investigated AI apps simulating emotional relationships, citing concerns about sensitive data collected without valid legal grounds. Similar challenges appear in education: Italian universities, bound by strict privacy rules, face hurdles when testing AI for enrollment. As one officer at the Università di Padova explained to us in our research, “Sharing this data with an outsourced system is heavily problematic — these are not just documents, they represent money, reputation, and trust.”

The message is clear, from consumer apps to academic institutions: organizations can no longer rely on generic privacy approaches. Compliance must be designed specifically for AI, and evolve as regulators refine their expectations.

Justifiable grounds for data processing

GDPR’s basic rules get more complicated when you’re dealing with AI systems. The requirements for lawful, fair, and transparent processing mean you need solid legal reasons for using people’s data, and users have to understand how your AI makes decisions about them. Regulators are digging deeper into AI training data and looking for bias problems, showing they care about both legal compliance and whether the system treats people fairly.

The purpose limitation rule says you can only use personal data for the specific reasons you collected it. This trips up a lot of companies – they get fined for using data without proper legal backing or stretching it beyond what they originally said they’d do. AI makes this worse because these systems can pull unexpected insights from data, tempting companies to use information in ways they never planned.

Many recent penalties come from exactly this problem. Companies collect data for one thing, then their AI discovers it’s useful for something else entirely. But GDPR doesn’t care how clever your AI is – you still need permission for each specific use.

Purpose limitation and data minimization

AI systems challenge traditional approaches to purpose limitation and data minimization because they can derive value from data in ways that teams never contemplated initially. Large language models trained on vast text corpora may inadvertently learn personal information, even though learning it was never the purpose of the original data collection.

The principle of data minimization requires you to collect only data that is adequate, relevant, and limited to what is necessary for the specific purpose. For AI systems, this means implementing technical and organizational measures to ensure training datasets include only essential personal data and that inference processes retain only required personal information.
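One common way to apply data minimization in a training pipeline is an explicit allow-list of fields. The sketch below assumes a hypothetical student-record schema; the field names and the `ALLOWED_FIELDS` set are illustrative assumptions, and your own list should follow from the documented purpose of the model.

```python
# Hypothetical allow-list: the only fields this model's purpose requires.
ALLOWED_FIELDS = frozenset({"course_id", "grade", "attendance_rate"})


def minimize(record: dict, allowed: frozenset = ALLOWED_FIELDS) -> dict:
    """Keep only the fields on the allow-list; drop everything else."""
    return {key: value for key, value in record.items() if key in allowed}


raw = {
    "student_name": "Example Student",
    "email": "student@example.org",
    "course_id": "MATH-101",
    "grade": 27,
    "attendance_rate": 0.92,
}

training_row = minimize(raw)
print(training_row)
```

An allow-list fails safe: a new field added upstream stays out of the training set until someone decides it is necessary.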

Transparency and explainability of AI

GDPR’s transparency requirements demand that individuals understand how their personal data is processed, including the logic involved in automated decision-making. This creates technical and practical challenges around explainability and user communication for AI systems.

Key enforcement issues:

- Failure to provide clear privacy notices explaining AI data usage has resulted in many substantial penalties

- Lack of transparency about how data is collected has become a primary focus of regulatory enforcement

- Inadequate explanation of why data is processed triggers compliance violations

- Insufficient detail about how AI systems make decisions leads to regulatory action

Based on our experience implementing AI systems across multiple industries, the most common compliance gap occurs during the transparency phase — organizations often underestimate how much detail users need about AI decision-making processes.

Rights of data subjects (access, deletion, objection)

Data subject rights under GDPR become more complex when applied to AI systems. The right of access requires you to provide individuals with information about how AI systems process their personal data, including any automated decision-making or profiling activities.

The right to erasure presents particular challenges for AI systems where personal data may be embedded in model parameters or training datasets. Technical solutions like machine unlearning provide pathways, though organizations face implementation challenges that require careful planning and resource allocation.
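Machine unlearning itself is beyond a short example, so the sketch below shows a simpler fallback instead: remove the person’s rows from the training set and list which model versions were trained on them, so they can be queued for retraining. The `training_rows` structure and the `model_lineage` registry are hypothetical; the approach assumes you track which data subjects contributed to which model.

```python
# Hypothetical training set and lineage registry (model version -> subject ids).
training_rows = [
    {"subject_id": "s-1", "features": [0.2, 0.7]},
    {"subject_id": "s-2", "features": [0.9, 0.1]},
]
model_lineage = {"model-v1": {"s-1", "s-2"}, "model-v2": {"s-2"}}


def erase_subject(subject_id, rows, lineage):
    """Drop the subject's rows and list the models that were trained on them."""
    remaining = [row for row in rows if row["subject_id"] != subject_id]
    affected = sorted(m for m, subjects in lineage.items() if subject_id in subjects)
    return remaining, affected


remaining, needs_retraining = erase_subject("s-1", training_rows, model_lineage)
print(len(remaining), "rows kept; retrain:", needs_retraining)
```

Without a lineage record like this, it is hard to say which models an erasure request actually touches.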

Security, anonymization, and pseudonymization

Poor security keeps causing big GDPR problems for AI companies. These systems churn through massive amounts of personal data, which makes them perfect targets for hackers. You need strong security from start to finish – not just basic protections.

Companies get hit with huge fines when their encryption falls short, access controls are sloppy, or they can’t properly monitor what’s happening with the data. Since AI systems handle so much information, when something goes wrong, it goes really wrong. A single breach can expose millions of people’s data, which means regulators come down hard.

The math is simple: more data processed equals bigger potential disasters. Regulators know this, so they expect AI companies to have security measures that match the scale of data they’re handling.

As one Enrollment Advisor at Universidad Europea noted: “We see potential for AI in areas like document classification, fraud detection, chat-based applicant support, and even predictive modeling to identify high-potential candidates. However, we’d need to ensure any solution complies with data protection laws (like GDPR) and integrates well with our existing systems (CRM, SIS, etc.).”

This reflects a growing industry view that security and compliance must be built into AI adoption from the very start, not treated as afterthoughts.
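Pseudonymization is one building block for this kind of built-in protection. The following is a minimal sketch using a keyed hash (HMAC-SHA256) from Python’s standard library to replace a direct identifier with a stable token. The key value and the `pseudonymize` helper are illustrative assumptions; in practice the key would live in a secrets manager, separate from the data.

```python
import hashlib
import hmac

# Assumption: in practice the key comes from a secrets manager and is stored
# separately from the pseudonymized dataset. This literal is a placeholder.
SECRET_KEY = b"replace-with-a-key-from-your-secrets-manager"


def pseudonymize(identifier: str, key: bytes = SECRET_KEY) -> str:
    """Replace a direct identifier with a keyed, deterministic token."""
    return hmac.new(key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()


token = pseudonymize("student@example.org")
print(token[:16], "...")
```

The same input always maps to the same token, so records can still be joined. Pseudonymized data remains personal data under GDPR, because whoever holds the key can recompute the token for any known identifier and link the records back to that person.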

Best Practices for Ensuring AI Compliance with GDPR

1. Embed privacy by design in AI development

Privacy by design stops companies from slapping privacy protections onto their AI systems at the last minute. Instead, you build privacy into every step – from picking your training data to watching how the system performs after launch.

The principle is simple: privacy protections should function automatically, not as optional features users must activate. For AI systems, this can include techniques like differential privacy during model training, limiting data retention to what’s strictly necessary, and giving users clear, accessible controls over their information.
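Differentially private model training is too involved for a short example, so the sketch below illustrates the underlying idea with a simpler technique: the Laplace mechanism applied to a counting query. The `private_count` helper, the sample ages, and the choice of `epsilon` are assumptions for illustration only.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(values, predicate, epsilon: float, rng: random.Random) -> float:
    """Noisy count; a counting query has sensitivity 1, so scale = 1 / epsilon."""
    true_count = sum(1 for v in values if predicate(v))
    return true_count + laplace_noise(1.0 / epsilon, rng)


# Fixed seed only to make the sketch repeatable; a real deployment would not
# use a fixed seed or a non-cryptographic generator.
rng = random.Random(42)
ages = [17, 19, 22, 16, 30, 18, 15]  # hypothetical data
noisy_minors = private_count(ages, lambda age: age < 18, epsilon=0.5, rng=rng)
print(f"noisy count of minors: {noisy_minors:.2f}")
```

A smaller `epsilon` adds more noise and gives stronger privacy, at the cost of accuracy.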

At AnyforSoft, this approach is built into our methodology:

- Privacy-first requirements: protections are considered from the earliest project stages.

- Explainable, auditable stack: we favor technologies with structured logging, GDPR-friendly storage, and modular AI components that can be isolated or replaced if compliance rules change.

- Client ownership of data: our systems ensure that data always belongs to the client.

- Seamless integration: AI features are embedded directly into the client’s environment, avoiding external dependencies that could compromise compliance.

By embedding these principles throughout the development cycle, we help ensure AI systems remain effective and compliant, even as regulatory expectations evolve.

2. Conduct Data Protection Impact Assessments (DPIAs)

Data Protection Impact Assessments (DPIAs) help identify privacy risks in AI systems before launch. Under GDPR, they’re mandatory whenever processing is likely to result in a high risk to individuals’ rights and freedoms, which covers most AI systems that make automated decisions or handle large volumes of personal data.

A thorough DPIA should examine the full lifecycle of data:

- Collection – how training data is sourced and validated.

- Processing – how the model handles information and generates predictions.

- Impact – whether automated outputs could meaningfully affect people’s rights, opportunities, or lives.

Focusing on just one stage is not enough. The assessment needs to map the entire journey — from datasets used for training, through processing logic, to the consequences of decisions. Catching issues early makes it possible to adjust without costly rebuilds later.

At AnyforSoft, we help organizations prevent risks even if formal DPIAs aren’t in place yet. When we build an admissions co-pilot, a test prep platform, or a media content system, we don’t just ask “what should the AI do?” — we map the entire data journey:

- Ownership – who controls the records.

- Storage – where and how the data is kept.

- Accountability – what happens if the model gets it wrong?

By surfacing risks in week one instead of month twelve, privacy becomes a proactive part of development, not a late-stage obstacle.

3. Define clear data governance policies

Effective data governance provides the organizational foundation for GDPR compliance in AI systems. Policies should clearly cover:

- Data collection – defining what data is necessary and how it is obtained.

- Data quality management – ensuring the accuracy and consistency of inputs.

- Access controls – specifying who can view or modify data.

- Retention and deletion – defining how long data is stored and secure disposal procedures.
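A retention rule from the list above can be expressed as a small, testable check. This sketch assumes hypothetical data categories and retention periods; the actual periods are a policy decision for your organization, not something GDPR fixes as a number.

```python
from datetime import date, timedelta

# Hypothetical retention periods per data category, in days.
RETENTION_DAYS = {"application_documents": 365, "chat_logs": 90}


def is_expired(category: str, collected_on: date, today: date) -> bool:
    """True when a record has outlived its category's retention period."""
    return today - collected_on > timedelta(days=RETENTION_DAYS[category])


records = [
    {"id": 1, "category": "chat_logs", "collected_on": date(2025, 1, 10)},
    {"id": 2, "category": "application_documents", "collected_on": date(2025, 1, 10)},
]

today = date(2025, 6, 1)
to_delete = [r["id"] for r in records if is_expired(r["category"], r["collected_on"], today)]
print(to_delete)
```

Running a check like this on a schedule turns the written retention policy into something that can be audited.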

Data governance should also assign clear roles and responsibilities for AI data management, including data controllers, processors, and third-party AI service providers. The distributed nature of AI development — with multiple internal teams and external partners — requires particular attention to data sharing agreements and processing records.

While data governance is formally the customer’s responsibility, at AnyforSoft, we assist clients in documenting, structuring, and enforcing governance as part of delivery. For example, we can map and record how data flows through AI systems, ensuring that policies are clear, actionable, and aligned with regulatory requirements.

4. Ensure human oversight of automated decisions

GDPR gives individuals the right not to be subject to decisions based solely on automated processing that produce legal effects or similarly significantly affect them (Article 22). For AI systems making such decisions, you must implement meaningful human oversight capabilities.

Human oversight goes beyond having a human in the loop — it requires that human reviewers have the information, tools, and authority needed to meaningfully evaluate and override AI system decisions. This includes access to decision-making rationales, relevant personal data, and alternative assessment approaches.
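One way to make oversight enforceable in code is to prevent high-impact recommendations from becoming final without a named reviewer. The sketch below is a simplified illustration; the `Decision` fields, the `significant_effect` flag, and both helper functions are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Decision:
    applicant_id: str
    model_recommendation: str   # e.g. "admit" or "reject"
    rationale: str              # explanation shown to the reviewer
    significant_effect: bool    # does the outcome significantly affect the person?
    reviewer: Optional[str] = None
    final_outcome: Optional[str] = None


def finalize(decision: Decision) -> Decision:
    """Auto-apply only low-impact decisions; everything else waits for a human."""
    if not decision.significant_effect:
        decision.final_outcome = decision.model_recommendation
    return decision


def human_review(decision: Decision, reviewer: str, outcome: str) -> Decision:
    """Record the reviewer's outcome, which may override the model."""
    decision.reviewer = reviewer
    decision.final_outcome = outcome
    return decision


pending = finalize(Decision("A-17", "reject", "score below threshold", True))
print(pending.final_outcome)  # still None: waiting for a reviewer
resolved = human_review(pending, "reviewer-1", "admit")
print(resolved.final_outcome, resolved.reviewer)
```

The reviewer sees the model’s rationale and can overrule it, which is the difference between meaningful oversight and a rubber stamp.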

At AnyforSoft, we embed this principle directly into our AI solutions. In the High Pass Education system, AI analyzes learners’ answers, collects insights, and drafts new questions based on a proprietary book of ethics, all under careful human supervision.

This way, automated outputs are constantly reviewed and validated before affecting learners, combining AI efficiency with regulatory compliance.

5. Maintain transparent user communication

Transparency requirements under GDPR demand clear communication about AI system operations, and technical complexity is no excuse for incomprehensible privacy notices. You must develop communication strategies that make AI processing understandable to individuals without technical expertise.

Privacy notices for AI systems should explain in plain language what personal data is collected, how AI systems use it, what decisions or predictions the systems make, and how individuals can exercise their rights. Visual aids, examples, and layered privacy notices can help make complex AI processes more accessible.

Yes, explaining neural networks to your grandmother remains challenging—but clear communication about data usage and decision-making processes is achievable and legally required.

6. Implement continuous GDPR monitoring and auditing

AI systems require ongoing monitoring because their behavior can change as they learn from new data or their operating environment evolves. Static compliance assessments conducted during development may fail to capture AI privacy risks that emerge during operational use.

To address this, reinforce development-time assessments with continuous monitoring that tracks key privacy metrics, including data processing volumes, decision-making patterns, user rights requests, and security incidents. Regular audits should assess technical compliance measures and organizational processes, such as data subject rights handling and incident response procedures.
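As one example of a monitorable metric, the sketch below flags data subject requests that are still open past the response window. GDPR sets a one-month deadline for responding (extendable in some cases); the 30-day constant is a simplifying assumption, and the request records are hypothetical.

```python
from datetime import date

# Assumption: 30 days approximates GDPR's one-month response window.
RESPONSE_WINDOW_DAYS = 30

requests = [
    {"id": "R-1", "type": "access", "received": date(2025, 3, 1), "closed": date(2025, 3, 20)},
    {"id": "R-2", "type": "erasure", "received": date(2025, 3, 5), "closed": None},
]


def overdue(open_requests, today: date):
    """Return ids of requests that are still open past the response window."""
    return [
        r["id"]
        for r in open_requests
        if r["closed"] is None and (today - r["received"]).days > RESPONSE_WINDOW_DAYS
    ]


late = overdue(requests, today=date(2025, 4, 10))
print(late)
```

The same pattern extends to the other metrics: define a threshold, check it on a schedule, and alert when it is crossed.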

GDPR vs. the EU AI Act

Shared and diverging principles

GDPR and the new EU AI Act work together in some ways but clash in others, creating headaches for companies trying to follow both sets of rules. They both want transparency, accountability, and protection for individuals, but they go about it differently and have separate enforcement systems.

GDPR cares about protecting people’s personal data from start to finish. The AI Act worries about bigger picture stuff – whether AI systems are safe, whether they violate fundamental rights, and what they mean for society overall. The problem comes when AI systems use personal data, which is pretty much every commercial AI system out there.

So you end up with two different regulators potentially looking at the same AI system for different reasons. One wants to know if you’re handling personal data properly, while the other wants to know if your AI system poses broader risks to people and society.

This creates a compliance mess where following one law perfectly might put you at odds with the other.

Legal obligations and enforcement

The AI Act can hit you with fines up to €35 million or 7% of your worldwide revenue for banned AI practices – that’s potentially worse than GDPR’s worst-case penalties. Now you’re dealing with two separate fine structures that can both apply to the same system.
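To see how the two ceilings compare, recall that GDPR’s top tier is €20 million or 4% of worldwide annual turnover, whichever is higher, and the AI Act’s ceiling for banned practices is likewise the higher of its two figures. The sketch below applies both formulas to a hypothetical turnover. It ignores special cases such as the AI Act’s treatment of smaller companies, and the turnover figure is an assumption chosen for illustration.

```python
def fine_ceiling(turnover_eur: int, fixed_cap_eur: int, turnover_percent: int) -> int:
    """Higher of a fixed cap or a percentage of worldwide annual turnover."""
    return max(fixed_cap_eur, turnover_eur * turnover_percent // 100)


turnover = 2_000_000_000  # hypothetical worldwide annual turnover, in EUR

gdpr_ceiling = fine_ceiling(turnover, 20_000_000, 4)    # GDPR top tier
ai_act_ceiling = fine_ceiling(turnover, 35_000_000, 7)  # AI Act, banned practices

print(f"GDPR ceiling:   EUR {gdpr_ceiling:,}")
print(f"AI Act ceiling: EUR {ai_act_ceiling:,}")
```

For this hypothetical company, the ceilings come out at €80 million under GDPR and €140 million under the AI Act. These are upper bounds, not predictions of an actual fine.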

High-risk AI systems under the AI Act need quality management processes, detailed technical docs, and ongoing monitoring after you launch them. But here’s the kicker – these requirements don’t let you off the hook for GDPR. You still need to follow all the personal data rules on top of everything else.

So if your AI system handles personal data and falls into the high-risk category, you’re juggling two sets of compliance demands. Miss either one and you could face serious financial consequences from completely different regulators.

Sectoral Considerations and Use Cases

Public sector and generative AI

Government use of AI tools like ChatGPT gets way more attention from regulators because of GDPR rules and extra laws that apply to public bodies. When Italy temporarily banned ChatGPT in 2023, the regulator was worried about three main things:

- How the system handled kids’ data

- Complete failure to check users’ ages

- Weak privacy protections overall

Government agencies face tougher rules than private companies when they use AI to process citizen data. They have to be more transparent and accountable because they’re handling public information. Plus, government AI systems often deal with the most sensitive stuff – medical records, criminal histories, financial data – which triggers the strongest GDPR protections.

This means public sector AI projects face a much higher bar for AI data protection. Citizens expect their governments to protect their data better than private companies do, and regulators enforce that expectation aggressively. A privacy mistake that might get a private company a warning could shut down a government AI program entirely.

Industry-specific examples (Healthcare, Education)

Healthcare AI systems process some of the most sensitive personal data categories under GDPR. Health data is a special category under Article 9, so a legitimate-interest justification alone is not enough: processing needs an additional condition such as explicit consent. Enforcement trends show increasing regulatory attention to healthcare AI systems, particularly those making diagnostic or treatment recommendations.

Educational institutions face equally complex challenges when implementing AI systems that process student data. The sector’s unique position — handling extensive personal information about individuals who are often minors — creates particularly stringent compliance requirements under GDPR.

Our research into AI adoption in education reveals the practical tensions institutions navigate. As one Enrollment Advisor at Universidad Europea explained:

“There is strong potential for AI in areas such as document classification, fraud detection, chat-based applicant support, and predictive modeling to identify high-potential candidates. At the same time, any solution must comply with data protection regulations (like GDPR) and integrate seamlessly with existing systems (CRM, SIS, etc.).”

Both healthcare and education sectors share a fundamental challenge: their AI systems make high-stakes decisions about people using sensitive personal information. In healthcare, incorrect AI-driven decisions can delay critical treatments. In education, biased algorithms can unfairly exclude qualified candidates or misclassify student needs. When these systems fail, the consequences extend beyond compliance violations, affecting individual life outcomes and institutional reputation.

AI ethics and bias in data processing

Biased AI creates problems that go way beyond basic data protection rules. When AI systems are biased, they can breach GDPR’s fairness principle and discriminate against people in ways that violate their basic rights.

Training data often carries old prejudices and stereotypes. If you train AI on this biased data, your system will keep discriminating against the same groups of people that faced discrimination in the past. It’s like baking unfairness directly into your technology.

What is often called the right to explanation becomes crucial here: under GDPR, people are entitled to meaningful information about the logic behind automated decisions that affect them. If someone gets turned down for a loan or flagged by a security system, they should be able to find out whether the AI treated them fairly or whether bias played a role.

This isn’t just about following privacy laws anymore – it’s about making sure AI doesn’t become a tool for systematic discrimination.
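A simple first check for this kind of bias is to compare selection rates across groups. The sketch below uses hypothetical model outputs and group labels, and it is only a starting point: GDPR does not prescribe a specific fairness metric, and a gap in rates calls for investigation rather than proving discrimination.

```python
from collections import defaultdict

# Hypothetical model outputs: (group label, was the applicant selected?)
outcomes = [
    ("group_a", True), ("group_a", True), ("group_a", False), ("group_a", True),
    ("group_b", True), ("group_b", False), ("group_b", False), ("group_b", False),
]


def selection_rates(rows):
    """Share of positive outcomes per group."""
    selected = defaultdict(int)
    total = defaultdict(int)
    for group, was_selected in rows:
        total[group] += 1
        selected[group] += int(was_selected)
    return {group: selected[group] / total[group] for group in total}


rates = selection_rates(outcomes)
ratio = min(rates.values()) / max(rates.values())
print(rates, f"lowest-to-highest ratio: {ratio:.2f}")
```

Tracking this ratio over time, alongside the monitoring described earlier, helps catch drift toward unequal treatment before it turns into a complaint.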

Conclusion

Combining AI with GDPR rules creates some of the toughest compliance headaches companies face. As AI becomes smarter and more widespread, regulators will keep watching more closely, and penalties will keep getting steeper. Having clear explainers on GDPR compliance becomes essential for any organization using AI.

The key is to build privacy protections into your AI from day one rather than trying to add them later. Companies that bake privacy into their design process, run proper risk assessments, set up solid data governance standards, and keep users informed about what’s happening will stay ahead of compliance problems and maintain customer trust.

The technical hurdles are real, but they’re not insurmountable. Privacy-boosting technologies, AI systems that can explain their decisions, and strong oversight frameworks allow you to build AI that protects people’s privacy while still delivering business results.

Our team has solutions if you’re leading AI projects and need help meeting GDPR compliance. Check out how AnyforSoft helps companies balance innovation with data protection requirements.